Public school teachers and principals deserve fair treatment in important decisions about who should be retained and who should be fired. They should not be fired based on student test scores, because the variation in student test scores is random. It is no more reliable than a coin toss. How wise would it be to fire doctors or lawyers based on a coin toss? Heads they stay. Tails they go. Imagine what this would do to the morale of staff who had almost no control over whether they stayed or were fired. In this report, we will look at the scientific research (or lack of it) on using student test scores to evaluate teachers.

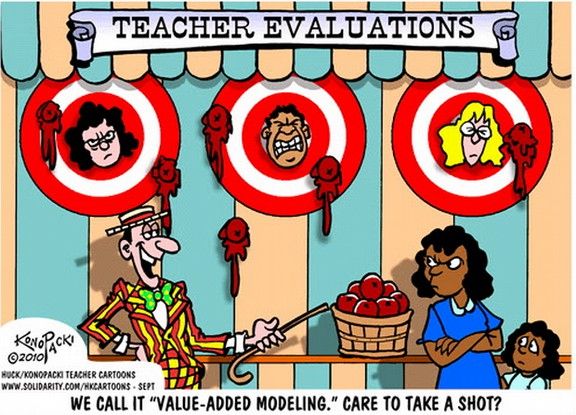

What is Value Added Modeling (VAM)?

The idea behind value added modeling is that you add up all of the high stakes test scores of a teacher's students and compare them to those same students' scores from the previous year. Teachers whose students gained the most are rated as good teachers (they added value to their students). Teachers whose students gained the least are rated as bad teachers and are fired.
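To make the idea concrete, here is a minimal sketch of the simple "gain score" version of VAM described above. It is only an illustration: operational value-added models are statistical regression models that are more complicated than this, and the teacher names and scores below are hypothetical, not real data.

```python
from statistics import mean


def average_gain(scores):
    """Average test score gain for one teacher's students.

    `scores` is a list of (last_year, this_year) pairs, one pair per student.
    """
    return mean(this_year - last_year for last_year, this_year in scores)


def rank_teachers(classes):
    """Return (teacher, average gain) pairs, sorted from highest to lowest gain."""
    gains = {teacher: average_gain(scores) for teacher, scores in classes.items()}
    return sorted(gains.items(), key=lambda item: item[1], reverse=True)


# Hypothetical classes: (last year's score, this year's score) for each student.
classes = {
    "Teacher A": [(300, 330), (280, 300), (310, 335)],
    "Teacher B": [(250, 255), (240, 250), (260, 262)],
    "Teacher C": [(290, 305), (270, 290), (300, 310)],
}

for teacher, gain in rank_teachers(classes):
    print(f"{teacher}: average gain {gain:.1f} points")
```

Nothing in this calculation asks why one class gained more than another. Differences in student poverty, attendance, health, or language background flow straight into the teacher's number.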

There are numerous flaws in using VAM to fire teachers.

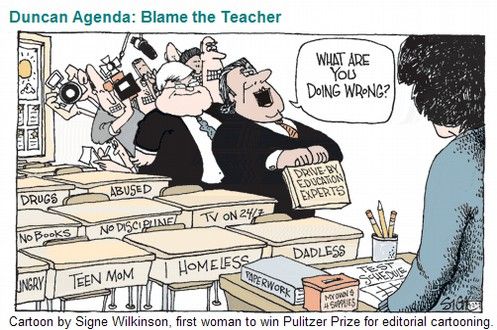

First, VAM scores are unfair to teachers working with students from lower income families. Students from higher income homes gain the most on high stakes tests because they do not have to deal with outside problems like living in a homeless shelter. So VAM results in firing teachers in high poverty schools.

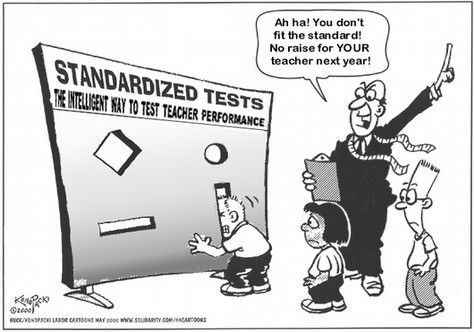

Second, VAM scores are not reliable. Because students assigned to any given teacher have backgrounds that vary greatly from year to year, the value added number assigned to a teacher varies greatly from year to year. A teacher rated as one of the best one year under VAM is likely to be rated one of the worst teachers the next year.

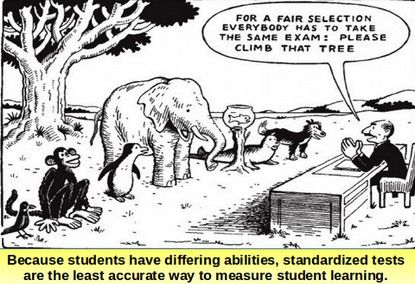

Third, VAM scores are not an accurate measure of student learning. High stakes multiple choice tests only measure very low levels of knowledge – like rote memorization of useless facts – rather than a true ability to solve problems.

Fourth, VAM scores vary dramatically depending on the test given to the students. Value added modeling assumes that the students were given a fair test that gave them a fair chance of passing. As we have shown in previous articles, Common Core tests are not fair in that they were deliberately designed to fail two thirds of American students, even though American students do better than any other students in the world once results are adjusted for poverty.

Fifth, more than 80 research studies have concluded that using VAM to fire teachers is unfair and unreliable.

To better understand the ridiculousness of Value Added Modeling, we will take a closer look at all five of these problems.

#1... VAM scores are unfair to teachers working with students from lower income families

Students from higher income homes gain the most on high stakes tests because they do not have to deal with outside problems like living in a homeless shelter. So the VAM model results in firing teachers who work in high poverty schools.

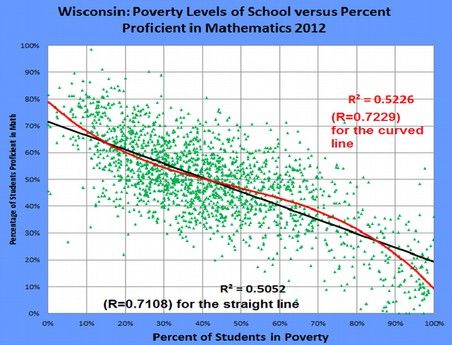

What high stakes tests really measure is the income level of the student

Numerous studies have shown there is a strong relationship between child poverty and student test performance. This is one of the many charts showing that child poverty is strongly related to student test performance.

Student test scores are also strongly influenced by school attendance, student health, family mobility, and the influence of neighborhood peers and classmates who may be relatively more or less advantaged. None of these factors are in the control of the teacher.

#2... VAM scores are extremely unreliable

If value added test scores were reliable, we would expect that teachers who have high scores one year would have high scores the next year. In other words, the good teachers would be good teachers from year to year and the bad teachers would be bad teachers from year to year. But this is not what actually happens. Good teachers one year, according to VAM, could be bad teachers the next year. VAM is no better than a coin toss at predicting which teachers are the good teachers. Teachers rated as being in the top third of all teachers one year are often in the bottom third the next year. You can be rated Teacher of the Year one year and be out of a job the next. Imagine if your job evaluation was dependent on the toss of a coin! Heads you stay. Tails you go.

Here is a quote from one teacher in Houston, Texas, about their experience with three years of VAM evaluations: “I do what I do every year. I teach the way I teach every year. My first year got me pats on the back; my second year got me kicked in the backside. And for year three, my scores were off the charts. I got a huge bonus, and now I am in the top quartile of all the English teachers. What did I do differently? I have no clue.” (Amrein-Beardsley & Collins, 2012, p. 15)

Because students assigned to any given teacher have backgrounds that vary greatly from year to year, the value added numbers assigned to teachers vary greatly from year to year. A teacher rated as one of the best one year under VAM is very likely to be rated one of the worst teachers the next year.

McCaffrey and colleagues (2009) studied several schools in Florida, dividing teachers into five equal groups based on their VAM scores. They found that teachers with the lowest VAM scores one year were likely to have much higher VAM scores the next year. Source: McCaffrey, D. F., Sass, T. R., Lockwood, J. R., & Mihaly, K. (2009). The intertemporal variability of teacher effect estimates. Education Finance and Policy, 4(4), 572–606.

Another study found that across five large urban districts, among teachers who were ranked in the top 20 percent of effectiveness in the first year, fewer than a third were in that top group the next year, and another third moved all the way down to the bottom 40 percent.
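The year-to-year comparison in these studies boils down to a simple calculation. The sketch below takes two years of VAM scores for the same teachers and reports what share of the top 20 percent in year one stayed in the top 20 percent in year two, and what share fell to the bottom 40 percent. The function names and the ten teachers' scores are hypothetical, made up for illustration and not taken from any study. As a point of reference, if rankings were reshuffled purely at random, about 20 percent of the top group would be expected to stay on top by chance alone.

```python
def rank_fractions(scores):
    """Map each teacher to rank position / number of teachers.

    0.0 is the highest-scoring teacher; the lowest scorer gets (n - 1) / n.
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    n = len(ordered)
    return {teacher: i / n for i, teacher in enumerate(ordered)}


def top_group_movement(year1, year2, top=0.2, bottom=0.4):
    """Share of year-1 top-group teachers who stay on top, and who fall to the bottom.

    Assumes both dictionaries contain the same teachers.
    """
    rank1 = rank_fractions(year1)
    rank2 = rank_fractions(year2)
    top_teachers = [t for t, r in rank1.items() if r < top]
    stayed = sum(1 for t in top_teachers if rank2[t] < top)
    fell = sum(1 for t in top_teachers if rank2[t] >= 1 - bottom)
    return stayed / len(top_teachers), fell / len(top_teachers)


# Hypothetical VAM scores for ten teachers over two years.
year1 = {f"T{i}": float(10 - i) for i in range(1, 11)}
year2 = {"T1": 8.5, "T2": 1.0, "T3": 9.0, "T4": 3.0, "T5": 6.0,
         "T6": 7.0, "T7": 2.0, "T8": 5.0, "T9": 4.0, "T10": 0.5}

stayed, fell = top_group_movement(year1, year2)
print(f"Stayed in top 20%: {stayed:.0%}; fell to bottom 40%: {fell:.0%}")
```

In this made-up example, one of the two "best" teachers stays on top while the other drops into the bottom 40 percent, even though nothing about their teaching is represented in the data at all.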

Sadly, this problem with VAM being grossly unreliable led to the tragic death of a highly respected 39-year-old teacher in the Los Angeles School District. A well-liked fifth-grade teacher committed suicide in 2010 after the Los Angeles Times published the VAM scores of all of the teachers in Los Angeles. This teacher taught at an elementary school with a very high percentage of low income children. Over 60% of the children were Spanish-speaking English Language Learners. VAM does not make any adjustments for the fact that these students cannot speak English and therefore do poorly on tests that are only printed in English. Naturally, only 5 of 35 teachers were rated by VAM as “average.” The teacher, Mr. Ruelas, had won many awards for being able to work in a bilingual manner to coach and help the children in his classes. But the Los Angeles Times failed to do the research needed to understand that VAM is a SCAM. They published the VAM scores as if they really meant something. In our opinion, the Los Angeles Times editors should be prosecuted for reckless manslaughter.

http://www.huffingtonpost.com/2010/09/28/rigoberto-ruelas-suicide-_n_742073.html

#3... VAM scores are not an accurate measure of student learning

High stakes testing is an inaccurate measure of the knowledge of students because it rates them based on how they did on a single day and on a single test, rather than on how they did during a full year of work and on many different kinds of assessment. Some students who actually learned more during the year may do poorly on multiple choice tests simply because they suffer from test anxiety or are poor test takers. This is why high stakes tests are an inaccurate way of measuring the knowledge of students or the value of teachers.

#4... VAM scores vary dramatically depending on the test given to the students and even the day the test is given to students

Value added modeling assumes that the students were given a fair test that gave them a fair chance of passing. As we have shown in previous articles, Common Core tests are not fair in that they were deliberately designed to fail two thirds of American students, even though American students do better than any other students in the world once results are adjusted for poverty.

#5... More than 80 research studies have concluded that using VAM to fire teachers is unfair and unreliable

More than 80 studies have been done on using the VAM method to evaluate teachers. They all found that VAM is not a consistent or reliable way to measure teacher performance. Here is a link to a list of these studies. http://vamboozled.com/recommended-reading/value-added-models/

In 2013, Edward Haertel, a Stanford University researcher, published a detailed report on the lack of reliability of using student test scores to evaluate teachers. He concluded that VAM scores are not good enough to be used in high stakes personnel decisions. Here is a quote from page 23 of his study: “Teacher VAM scores should emphatically not be included as a substantial factor with a fixed weight in consequential teacher personnel decisions. The information they provide is simply not good enough to use in that way. It is not just that the information is noisy. Much more serious is the fact that the scores may be systematically biased for some teachers and against others...High-stakes uses of teacher VAM scores could easily have additional negative consequences for children’s education.”

http://www.ets.org/Media/Research/pdf/PICANG14.pdf

A 2010 study by the Economic Policy Institute concluded that student standardized test scores are not reliable indicators of how effective any teacher is in the classroom. The authors of the study, titled “Problems with the Use of Student Test Scores to Evaluate Teachers,” included four former presidents of the American Educational Research Association; two former presidents of the National Council on Measurement in Education; the current and two former chairs of the Board on Testing and Assessment of the National Research Council of the National Academy of Sciences; the president-elect of the Association for Public Policy Analysis and Management; the former director of the Educational Testing Service’s Policy Information Center; a former associate director of the National Assessment of Educational Progress; a former assistant U.S. secretary of education; a member of the National Assessment Governing Board; and the vice president, a former president, and three other members of the National Academy of Education.

http://epi.3cdn.net/724cd9a1eb91c40ff0_hwm6iij90.pdf

The Board on Testing and Assessment of the National Research Council of the National Academy of Sciences has stated: “VAM estimates of teacher effectiveness should not be used to make operational decisions because such estimates are far too unstable to be considered fair or reliable.”

2014 American Statistical Association (ASA) Slams VAM

In 2014, the American Statistical Association issued a statement warning that value-added modeling (VAM) is fraught with error, inaccurate, and unstable. For example, the ratings may change if a different test is used. The ASA report said: “Ranking teachers by their VAM scores can have unintended consequences that reduce quality.” American Statistical Association. (2014). ASA Statement on Using Value-Added Models for Educational Assessment. http://www.amstat.org/policy/pdfs/ASA_VAM_Statement.pdf

If student test scores are unreliable, how can we fairly evaluate teachers?

There are other, more reliable ways to measure classroom performance. One of the most reliable is an actual classroom observation performed by trained administrators. Nearly all teachers are subject to this kind of annual evaluation, which is used to identify the teachers in greatest need of improvement.

Teachers and Principals Deserve Fairness

Every classroom should have a well-educated, professional teacher, and every public school district should recruit, prepare and retain teachers who are qualified to do the job. The problem comes in unfairly evaluating and firing teachers rather than helping teachers. Our students and teachers deserve an evaluation system that is fair and accurate. Diane Ravitch is one of our nation's leading educational researchers. Here is what she has to say about using high stakes testing to fire teachers: “No other nation in the world has inflicted so many changes or imposed so many mandates on its teachers and public schools as we have in the past dozen years. No other nation tests every student every year as we do. Our students are the most over-tested in the world. No other nation—at least no high-performing nation—judges the quality of teachers by the test scores of their students. Most researchers agree that this methodology is fundamentally flawed, that it is inaccurate, unreliable, and unstable.”

http://www.washingtonpost.com/blogs/answer-sheet/wp/2014/01/18/everything-you-need-to-know-about-common-core-ravitch/

Because VAM would unfairly punish teachers and principals for factors such as student poverty that they have no control over, we urge you to oppose Senate Bill 5748 and House Bill 2019. If you have any questions, feel free to email us.

Regards,

Elizabeth Hanson, M.Ed., and David Spring, M.Ed.

Coalition to Protect Our Public Schools (dot) org